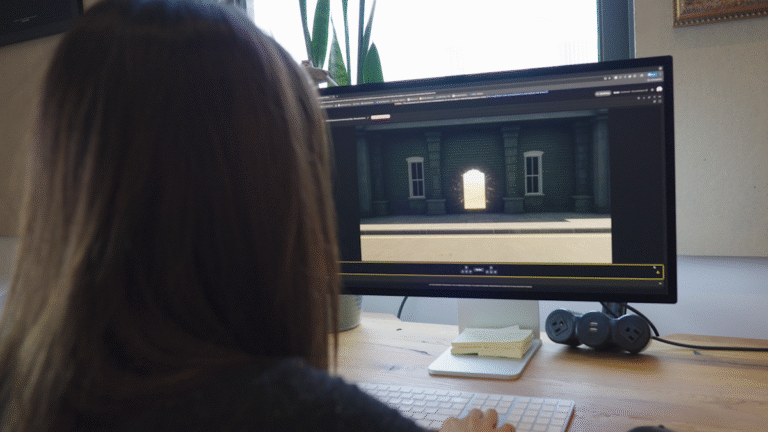

At the start of December, Google DeepMind released Genie 2. The Genie family of AI systems are what are known as world models: they generate images as the user (either a human or, more likely, an automated AI agent) moves through the world the software is simulating. The resulting video of the model in action may look like a video game, but DeepMind has always positioned Genie 2 as a way to train other AI systems to be better at what they’re designed to accomplish. With its new Genie 3 model, which the lab announced on Tuesday, DeepMind believes it has made an even better system for training AI agents.

At first glance, the jump between Genie 2 and 3 isn’t as dramatic as the one the model made last year. With Genie 2, DeepMind’s system became capable of generating 3D worlds, and could accurately reconstruct part of the environment even after the user or an AI agent left it to explore other parts of the generated scene. Environmental consistency was often a weakness of prior world models. For instance, Decart’s Oasis system had trouble remembering the layout of the Minecraft levels it would generate.

By comparison, the enhancements offered by Genie 3 seem more modest, but in a press briefing Google held ahead of today’s official announcement, Shlomi Fruchter, research director at DeepMind, and Jack Parker-Holder, research scientist at DeepMind, argued they represent important stepping stones on the road toward artificial general intelligence.

Google DeepMind

So what exactly does Genie 3 do better? To start, it outputs footage at 720p, instead of 360p like its predecessor. It’s also capable of sustaining a “consistent” simulation for longer. Genie 2 had a theoretical limit of up to 60 seconds, but in practice the model would often start to hallucinate much earlier. By contrast, DeepMind says Genie 3 is capable of running for several minutes before it starts producing artifacts.

Also new to the model is a capability DeepMind calls “promptable world events.” Genie 2 was interactive insofar as the user or an AI agent was able to input movement commands and the model would respond after it had a few moments to generate the next frame. Genie 3 does this work in real time. Moreover, it’s possible to tweak the simulation with text prompts that instruct Genie to alter the state of the world it’s generating. In a demo DeepMind showed, the model was told to insert a herd of deer into a scene of a person skiing down a mountain. The deer didn’t move in the most realistic manner, but this is the killer feature of Genie 3, says DeepMind.

As mentioned before, the lab primarily envisions the model as a tool for training and evaluating AI agents. DeepMind says Genie 3 could be used to teach AI systems to tackle “what if” scenarios that aren’t covered by their pre-training. “There are a lot of things that have to happen before a model can be deployed in the real world, but we do see it as a way to more efficiently train models and increase their reliability,” said Fruchter, pointing to, for example, a scenario where Genie 3 could be used to teach a self-driving car how to safely avoid a pedestrian who walks in front of it.

Despite the improvements DeepMind has made to Genie, the lab acknowledges there’s much work to be done. For instance, the model can’t generate real-world locations with perfect accuracy, and it struggles with text rendering. Moreover, for Genie to be truly useful, DeepMind believes the model needs to be able to sustain a simulated world for hours, not minutes. Still, the lab feels Genie is ready to make a real-world impact.

“We’re already at the point where you wouldn’t use [Genie] as your sole training environment, but you can certainly find things you wouldn’t want agents to do because if they act unsafe in some settings, even if those settings aren’t perfect, it’s still good to know,” said Parker-Holder. “You can already see where this is going. It will get increasingly useful as the models get better.”

For the time being, Genie 3 isn’t available to the general public. However, DeepMind says it’s working to make the model available to additional testers.